Containerizing an ML Model - Part 1

Prerequisites

- Conda (Miniconda or Anaconda)

- Docker

Description

This is the first in a series of posts exploring how to deliver machine learning models as microservices. The end goal is to run these microservices in a Kubernetes cluster. Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications.

Since Kubernetes manages containerized applications, we first need containers, and before we can build a container we need an application to put in it. This first part shows how to expose a spaCy named entity recognition (NER) model as a REST API using Flask.

The Application

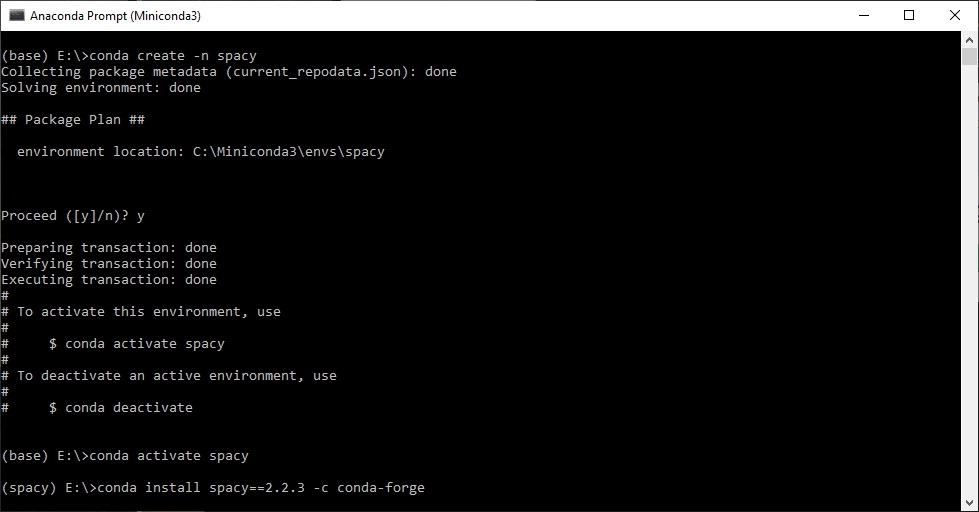

From the Anaconda Prompt, create a conda environment named spacy, activate it, and then install spaCy.

Next, install the en_core_web_sm model. This is the model we will use to extract named entities from text. For more information on this model, refer to the spaCy documentation for English pretrained models.

Install Flask, the web framework we will use to serve our app as a REST API.
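Before writing the app, it can help to confirm that all three packages installed above are importable from the activated environment. The snippet below is a minimal sketch that uses only the standard library's `importlib.util.find_spec`. It works for en_core_web_sm because the model is installed as an ordinary Python package. It only checks that each package can be found, not which version is installed. It assumes you run it with the Python interpreter of the `spacy` environment. `missing_packages` is a hypothetical helper name.

```python
import importlib.util

# The packages installed in the steps above; en_core_web_sm is installed as a
# regular Python package, so it can be located like any other import.
REQUIRED = ["spacy", "flask", "en_core_web_sm"]


def missing_packages(names):
    """Return the top-level package names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages(REQUIRED)
    print("All set" if not missing else "Missing: " + ", ".join(missing))
```

If anything is reported as missing, re-check that the `spacy` environment is active before installing again.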

Write your application and save it to a file called ner.py. The app has a single route, /entities, which accepts POST requests whose body is plain text. It responds with JSON containing a list of the named entities found, each with its label, its text, and its start and end character positions within the text. If an error occurs, the response is JSON indicating an 'Internal Server Error' (code 500), along with any information useful for debugging. Because the service is meant to be called by other services rather than viewed in a browser, responses are JSON rather than HTML.

```python
import sys

import spacy
from flask import Flask, Response, json, request

app = Flask(__name__)

# Load the model once at startup and record whether it succeeded, so the
# endpoint can return a useful error instead of crashing.
status = {}
try:
    nlp_model = spacy.load('en_core_web_sm')
    status["isready"] = True
    status["message"] = ""
except Exception as error:
    status["isready"] = False
    status["message"] = "Error 3:" + str(sys.exc_info()[0]) + str(error)


def error_response(description):
    """Build a JSON 500 response carrying debugging information."""
    return Response(json.dumps({
        "code": 500,
        "name": 'Internal Server Error',
        "description": description,
    }), status=500, mimetype='application/json')


@app.route("/entities", methods=['POST'])
def get_entities():
    try:
        if not status["isready"]:
            return error_response(status["message"])

        # Decode the plain-text body. Calling str() on the raw bytes would
        # produce "b'...'" and shift every character offset.
        text = request.get_data(as_text=True)
        doc = nlp_model(text)

        result = []
        for ent in doc.ents:
            result.append({
                "label": ent.label_,
                "text": ent.text,
                "start": ent.start_char,
                "end": ent.end_char,
            })
        return Response(json.dumps(result), mimetype='application/json')
    except Exception as error:
        return error_response("Error 3:" + str(sys.exc_info()[0]) + str(error))


if __name__ == "__main__":
    app.run(host='0.0.0.0')
```
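Each entity in the response carries `start` and `end` character offsets into the submitted text. As with spaCy's `Span.start_char` and `Span.end_char`, `end` is exclusive, so `text[start:end]` should reproduce the entity's `text` field. A small client-side consistency check can catch offset problems, such as a request body that was decoded incorrectly on the server. The sketch below assumes the response has already been decoded into a list of dicts with the keys used in ner.py. `mismatched_entities` is a hypothetical helper name, and the sample entities are hand-written for illustration, not actual model output.

```python
def mismatched_entities(text, entities):
    """Return the entities whose "text" field differs from text[start:end].

    Assumes "start" is inclusive and "end" is exclusive, following Python
    slicing conventions (as spaCy's start_char/end_char do).
    """
    return [
        ent for ent in entities
        if text[ent["start"]:ent["end"]] != ent["text"]
    ]


sample_text = "Apple is opening an office in London."
# Hand-written entities for illustration, not real model output.
sample = [
    {"label": "ORG", "text": "Apple", "start": 0, "end": 5},
    {"label": "GPE", "text": "London", "start": 30, "end": 36},
]
print(mismatched_entities(sample_text, sample))  # → []
```

An empty list means every offset lines up with the original text. Any entries returned point to a mismatch between what the client sent and what the server analyzed.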

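Start the app with python ner.py, then test it with your favorite tool by sending a POST request with a plain-text body to localhost:5000/entities. If everything works, you should get back a JSON list of entities, each with its label, text, and start and end offsets.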
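If you would rather test from Python than from a tool such as curl or Postman, the sketch below posts plain text to the endpoint using only the standard library. It assumes the app is running locally on Flask's default port 5000. It also assumes error responses follow the JSON shape used in ner.py, and falls back to the raw body otherwise. `build_request` and `extract_entities` are hypothetical helper names.

```python
import json
import urllib.error
import urllib.request

# Assumed address: the Flask development server started by ner.py.
ENTITIES_URL = "http://localhost:5000/entities"


def build_request(text, url=ENTITIES_URL):
    """Build a POST request whose body is the plain text to analyze."""
    return urllib.request.Request(
        url,
        data=text.encode("utf-8"),
        headers={"Content-Type": "text/plain; charset=utf-8"},
        method="POST",
    )


def extract_entities(text, url=ENTITIES_URL, timeout=10.0):
    """Send text to the service and return the decoded list of entities."""
    try:
        with urllib.request.urlopen(build_request(text, url), timeout=timeout) as resp:
            return json.loads(resp.read().decode("utf-8"))
    except urllib.error.HTTPError as err:
        raw = err.read().decode("utf-8", errors="replace")
        # ner.py reports failures as JSON with a "description" field;
        # anything else (e.g. an HTML 404 page) is passed through as-is.
        try:
            parsed = json.loads(raw)
            detail = parsed.get("description", raw) if isinstance(parsed, dict) else raw
        except ValueError:
            detail = raw
        raise RuntimeError(f"HTTP {err.code}: {detail}") from err
```

With the app running, `extract_entities("Apple is opening an office in London.")` returns the decoded list, which you can print or inspect entity by entity.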

In the next part, Containerizing an ML Model - Part 2, we will create the Docker container for our app.

Sample Code

https://github.com/erotavlas/Containerizing-an-ML-Model


Salvatore S. © 2020